By Adrian Mincher, Director at Earnix

Across the insurance sector, from boardrooms to back-office operations, a pressing new question is being asked: how can insurers effectively embrace agentic AI within a highly regulated industry?

As autonomous, generative AI systems move beyond analysis into action – making decisions, initiating processes, and adapting in real time – the stakes are rising fast. For an industry built on trust, regulation, and responsibility, this shift presents both extraordinary opportunity and significant risk. At the heart of the debate is a fundamental challenge: how do we harness the power of agentic AI while ensuring the human oversight and accountability that customers, regulators, and society expect?

Agentic AI, or autonomous generative AI, marks a fundamental shift from the passive predictive models that currently define AI deployments. These systems don’t just analyse or recommend; they also act. They make decisions, take initiative, and adapt dynamically to achieve outcomes, often across multiple domains. When designed and governed responsibly, the potential is enormous: accelerating decision-making, enhancing personalisation, and enabling real-time responses to complex customer needs.

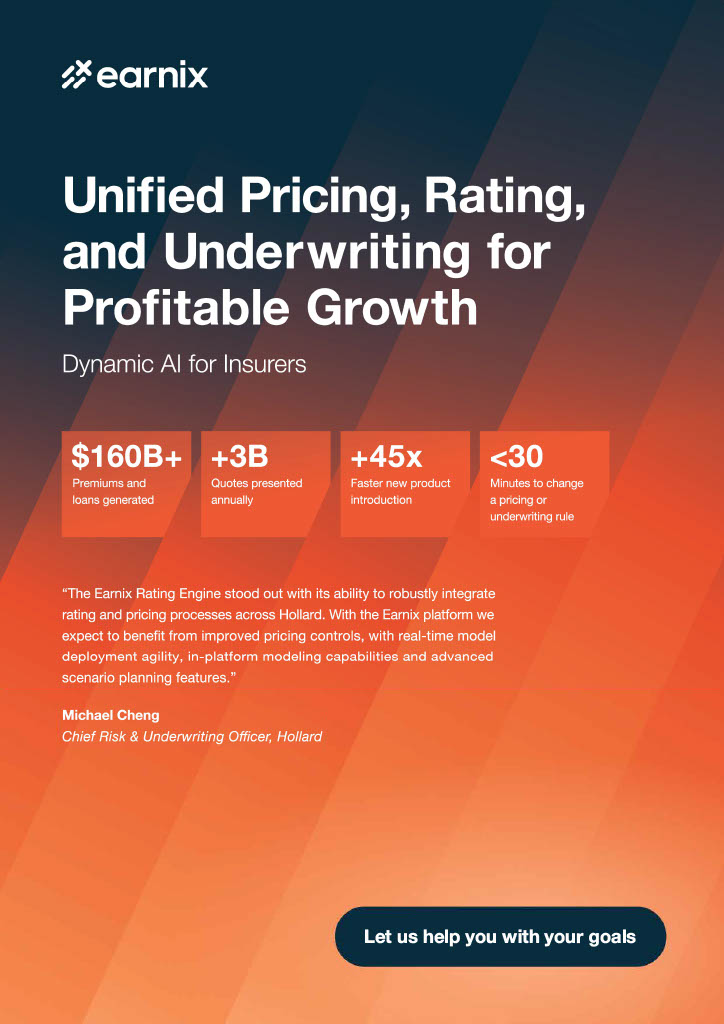

Unified Pricing, Rating & Underwriting for Profitable Growth.

The Earnix Rating Engine stood out with its ability to robustly integrate rating and pricing processes across Hollard. With the Earnix platform we expect to benefit from improved pricing controls, with real-time model deployment agility, in-platform modeling capabilities and advanced scenario planning features.

DYNAMIC AI FOR INSURERS

While a Deloitte forecast in late 2024 predicted that 25% of AI-using enterprises would deploy AI agents by 2025, the reality in insurance is more complex. The gap between strategy and execution remains significant, where there is a clear strategy at all. Rather than rapid acceleration, we are seeing early, practical use cases such as claims triage, compliance monitoring, HR support, and customer engagement. These early adopters are beginning to demonstrate measurable benefits in efficiency, accuracy, and customer centricity.

But autonomy without accountability is a risk no regulated industry can afford to ignore. That’s why the “human-in-the-loop” model is essential. Agentic AI must augment, not replace, human expertise and oversight. At Earnix, our acquisition of Zelros reflects this commitment. By blending predictive analytics with generative capabilities and embedding human judgment at the core, we are focused on delivering value-driven outcomes that meet regulatory expectations and customer needs alike.

Take customer personalisation. With agentic AI, we can proactively tailor product recommendations or adjust pricing dynamically within specific regulatory environments. But the system must also be explainable, fair, and aligned with regulations such as the UK’s FCA Consumer Duty principles. That means building agentic systems that operate within well-defined ethical and regulatory boundaries, guided by human experts who understand not only the technology but also its broader impacts.

We’re also entering an era of multi-agent systems, in which multiple AI agents collaborate in real time to solve interdependent problems. This opens up transformative possibilities in areas like dynamic portfolio risk management or end-to-end claims resolution. But each additional layer of autonomy introduces complexity around model validation, data lineage, decision traceability, and compliance.

Ultimately, the conversation around agentic AI in insurance is about responsibility. The systems we design today will define the standard for trustworthy, intelligent automation tomorrow. To earn and retain customer trust, we must ensure that these tools are not only powerful and efficient but also governed by frameworks that prioritise human values, regulatory integrity, and strategic oversight.